Understanding GANs and Working with Code in Python

Learn the basics of Generative Adversarial Networks (GANs) and how they work for image generation with basic code in Python

Hey readers, I’m Shiwanshi, and this is my first blog on Hashnode. As an Artificial Intelligence student, I was thrilled to learn about Generative Adversarial Networks (GANs) long before they went viral. So, let's understand what GANs are and why they are becoming so famous.

GANs have emerged as powerful tools that can generate astonishingly realistic images and push the boundaries of creativity. One such application of GANs is Drag Your GAN. This technology has gone viral all over social media by showing how you can change the content of an entire image using only a mouse pointer. This is a blow to Photoshop!

Before understanding how Drag Your GAN works, we need a solid grasp of how GANs themselves work. I’ll also give a basic introduction to coding GANs in Python. So let's get started.

What are Neural Networks in GANs?

To first understand GANs in Python, let us have a quick overview of neural networks in Deep Learning.

Neural networks are computational models loosely inspired by the biological neural networks of the human brain. In simpler terms, they are the brains of the machines!

Let's simplify. Imagine your brain as a network of linked neurons. These neurons exchange information while forming connections with one another. Neural networks work in a similar way, except that they are made up of artificial neurons, also known as "perceptrons".

These artificial neurons are arranged in layers: generally an input layer, one or more hidden layers, and an output layer. Each neuron receives inputs, multiplies them by weights that are learned during training, adds a bias, and passes the result through an activation function to generate an output. It works mathematically, turning inputs into useful outcomes.
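As a rough illustration of the paragraph above, a single artificial neuron can be sketched in plain Python. The inputs, weights, bias, and the choice of a sigmoid activation are illustrative assumptions picked by hand, not values from a trained network.

```python
import math

def sigmoid(x):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def neuron(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, passed through an activation."""
    weighted_sum = sum(i * w for i, w in zip(inputs, weights)) + bias
    return sigmoid(weighted_sum)

# Hypothetical example values: three inputs, hand-picked weights and bias
inputs = [0.5, -1.0, 2.0]
weights = [0.4, 0.3, -0.2]
bias = 0.1

output = neuron(inputs, weights, bias)
print(output)  # a value between 0 and 1
```

A full network simply stacks many of these neurons into layers, with the outputs of one layer becoming the inputs of the next.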

Let us compare the structure of a neuron and a neural network:

Dendrites: Inputs

Cell nucleus: Nodes

Synapse: Weighted connections between artificial neurons

Axon: Outputs

Artificial neural networks (ANNs) can recognize intricate patterns and connections in data. They can identify objects in photos, comprehend and produce human language, predict trends, make recommendations, and much more. Neural networks aim to mimic the network of neurons that makes up the human brain so that computers can understand and make decisions in a human-like manner.

Understanding GANs

Generative Adversarial Networks (GANs) are an approach to generative modelling that has attracted much interest for their extraordinary capacity to produce high-quality, realistic synthetic data.

Generative modelling is an unsupervised machine learning task that automatically identifies and learns the regularities or patterns in input data so that the model can produce new examples that could have been reasonably derived from the original dataset.
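To make the idea concrete, here is a deliberately tiny generative model, far simpler than a GAN: it learns the mean and spread of some numbers and then samples new numbers from a Gaussian with those statistics. The data values and the Gaussian assumption are made up for illustration.

```python
import random
import statistics

# Hypothetical training data: heights in centimetres (made-up values)
data = [158.0, 162.5, 170.1, 165.3, 175.8, 168.4, 160.9, 172.2]

# "Learning": estimate the regularities of the data (here, mean and spread)
mu = statistics.mean(data)
sigma = statistics.stdev(data)

# "Generating": draw new examples that could plausibly come from the same data
rng = random.Random(0)  # fixed seed so the run is repeatable
new_samples = [rng.gauss(mu, sigma) for _ in range(5)]

print(f"learned mean={mu:.2f}, std={sigma:.2f}")
print(new_samples)
```

A GAN follows the same learn-then-sample idea, but replaces the two hand-computed statistics with a neural network that can capture far richer patterns, such as those in images.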

Generative Adversarial Networks (GANs) comprise two essential neural networks: a generator network and a discriminator network, which operate in an adversarial training process to create and assess data.

They are based on a game-theoretic setup in which two players, the generator and the discriminator, compete. The generator aims to produce synthetic data, such as photographs, whereas the discriminator's job is to distinguish between actual data from a training set and generated data.
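This competition can be written down as two loss values. The sketch below uses binary cross-entropy, the loss named in the Keras code later in this post. The probability values are invented to show how the numbers move, and the helper function names are my own.

```python
import math

def binary_cross_entropy(predictions, labels, eps=1e-12):
    """Average binary cross-entropy between predicted probabilities and 0/1 labels."""
    total = 0.0
    for p, y in zip(predictions, labels):
        p = min(max(p, eps), 1.0 - eps)  # clip to avoid log(0)
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(predictions)

def discriminator_loss(d_real, d_fake):
    """The discriminator wants to output 1 on real data and 0 on fakes."""
    predictions = d_real + d_fake
    labels = [1] * len(d_real) + [0] * len(d_fake)
    return binary_cross_entropy(predictions, labels)

def generator_loss(d_fake):
    """The generator wants the discriminator to output 1 on its fakes."""
    return binary_cross_entropy(d_fake, [1] * len(d_fake))

# Made-up discriminator outputs (probability that an image is real)
d_real = [0.9, 0.8]         # on real images
d_fake_early = [0.1, 0.2]   # early on, fakes are easy to spot
d_fake_later = [0.6, 0.7]   # later, fakes have become convincing

print(discriminator_loss(d_real, d_fake_early), generator_loss(d_fake_early))
print(discriminator_loss(d_real, d_fake_later), generator_loss(d_fake_later))
```

With these numbers, the generator's loss falls as its fakes become more convincing, while the discriminator's loss rises: one player's gain is the other's loss.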

To understand GANs better, let's imagine them as a dynamic pair doing a creative dance. The discriminator takes the role of an astute art critic, while the generator is an artist attempting to produce unique works of art. The generator creates artificial data, such as text or images, that closely mimics real data from a training set. The discriminator, on the other hand, tries to tell authentic data from fake data.

Working of GANs for Image Generation

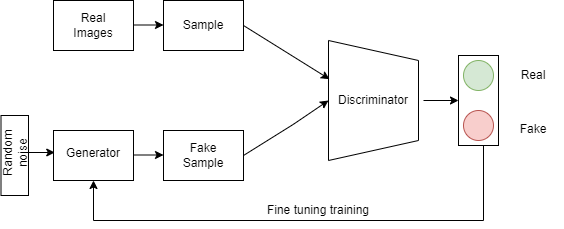

Let us look closely at the roles of the two neural networks:

Generator: The generator begins training with random noise as input, a starting point for creating images. This noise may be considered a collection of random values the generator processes.

Random Noise Generation in Python:

```python
import numpy as np

# Generating random noise
latent_dim = 100   # Dimension of the noise vector
num_samples = 10   # Number of samples to generate

# Generate random noise vector
noise = np.random.normal(0, 1, (num_samples, latent_dim))
```

The generator comprises layers of artificial neurons that create a neural network architecture. These neurons are in charge of turning the random noise into exciting pictures. The generator progressively learns to modify its internal parameters, especially weights and biases, while training continues.

Generator in Python:

```python
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense, Input, Reshape

# Generator network architecture
generator = Sequential()
# An explicit Input layer replaces the older input_dim argument
generator.add(Input(shape=(latent_dim,)))
generator.add(Dense(256, activation='relu'))
generator.add(Dense(512, activation='relu'))
# 784 = 28 * 28 pixel values, each squashed into [0, 1] by the sigmoid
generator.add(Dense(784, activation='sigmoid'))
generator.add(Reshape((28, 28)))
```

Discriminator: The discriminator tells the difference between real samples and those produced by the generator, i.e. fake samples. Like the generator, the discriminator is a network of artificial neurons. It takes an image, real or generated, and predicts whether it comes from the real dataset or from the generator.

Discriminator in Python:

```python
from tensorflow.keras.layers import Flatten, Input

# Discriminator network architecture
discriminator = Sequential()
# An explicit Input layer replaces the older input_shape argument
discriminator.add(Input(shape=(28, 28)))
discriminator.add(Flatten())
discriminator.add(Dense(512, activation='relu'))
discriminator.add(Dense(256, activation='relu'))
# Single output: the probability that the image is real
discriminator.add(Dense(1, activation='sigmoid'))
```

The discriminator struggles at first to differentiate between the two. But as the training goes on, it learns to better tell real images apart from fake ones.
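One rough way to watch this improvement is to track the discriminator's accuracy over time. The sketch below assumes a 0.5 decision threshold and uses invented probabilities; the function name is hypothetical and not part of Keras.

```python
def discriminator_accuracy(probabilities, labels, threshold=0.5):
    """Fraction of images the discriminator classifies correctly.

    probabilities: predicted chance that each image is real
    labels: 1 for real images, 0 for generated ones
    """
    correct = 0
    for p, y in zip(probabilities, labels):
        predicted = 1 if p >= threshold else 0
        if predicted == y:
            correct += 1
    return correct / len(labels)

labels = [1, 1, 1, 0, 0, 0]  # three real images, then three fakes

# Made-up outputs from an untrained discriminator: close to guessing
early = [0.52, 0.48, 0.51, 0.53, 0.49, 0.50]
# Made-up outputs after some training: real and fake are better separated
later = [0.91, 0.85, 0.40, 0.12, 0.30, 0.08]

print(discriminator_accuracy(early, labels))
print(discriminator_accuracy(later, labels))
```

An accuracy near one half means the discriminator is effectively guessing, while a higher value means it is separating real from fake more reliably.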

Compiling and Training GAN:

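```python
from tensorflow.keras.datasets import mnist
from tensorflow.keras.optimizers import Adam

# Assumption: the post does not say where X_train comes from. MNIST is used
# here because its 28x28 grayscale images match the network shapes above.
(X_train, _), _ = mnist.load_data()
X_train = X_train.astype('float32') / 255.0  # scale to [0, 1] like the sigmoid output

# Compiling the discriminator
discriminator.compile(loss='binary_crossentropy',
                      optimizer=Adam(learning_rate=0.0002),
                      metrics=['accuracy'])

# Freeze the discriminator inside the combined model so that the generator
# step updates only the generator's weights (the usual Keras GAN pattern;
# check that it behaves this way on your Keras version)
discriminator.trainable = False

# Combining the generator and discriminator into a GAN
gan = Sequential([generator, discriminator])
gan.compile(loss='binary_crossentropy', optimizer=Adam(learning_rate=0.0002))

# Training the GAN (each epoch here trains on a single random batch)
epochs = 100
batch_size = 32

for epoch in range(epochs):
    # Select a random batch of real images
    real_images = X_train[np.random.randint(0, X_train.shape[0], batch_size)]

    # Generate a batch of fake images
    noise = np.random.normal(0, 1, (batch_size, latent_dim))
    generated_images = generator.predict(noise, verbose=0)

    # Concatenate real and fake images
    X = np.concatenate((real_images, generated_images))

    # Labels: 1 for real images, 0 for generated images
    y = np.concatenate((np.ones((batch_size, 1)), np.zeros((batch_size, 1))))

    # Train the discriminator
    discriminator_loss = discriminator.train_on_batch(X, y)

    # Train the generator: it is rewarded when its fakes are labelled as real
    noise = np.random.normal(0, 1, (batch_size, latent_dim))
    y_gen = np.ones((batch_size, 1))
    generator_loss = gan.train_on_batch(noise, y_gen)
```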

Let's now examine the relationship between the generator and discriminator during training:

Training Step 1:

- The generator receives random noise, a set of random values, as input and generates a synthetic image.

- A combination of real images from the dataset and generated images is sent to the discriminator. It assesses each picture and gives a verdict on whether it is genuine or fake.

- The generator receives feedback from the discriminator on how well it did. This feedback helps the generator identify where the created picture needs to improve.

Training Step 2:

- The generator evaluates the discriminator's feedback and adjusts its internal parameters.

- The generator produces another batch of pictures, aiming to make them more realistic than the last.

- With its improved knowledge, the discriminator assesses the fresh batch of pictures and offers feedback once again.

Iteration:

- The interaction between the generator and discriminator continues across iterations. The discriminator becomes better at telling real pictures from fake ones, while the generator produces more realistic images with each iteration.

- The discriminator tries to improve its ability to correctly identify generated pictures, while the generator tries to produce images that look more and more like real ones over time.

With each trying to outwit the other, the training process alternates between the generator and the discriminator. This adversarial relationship constantly pushes both networks to do better.
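The alternating turns described above can be sketched without any deep learning library. The toy below is my own simplification, not the Keras model from this post: the "images" are single numbers, the generator is a straight line `a * z + b`, the discriminator is a single sigmoid neuron, and the gradients are derived by hand. All values are hypothetical.

```python
import math
import random

def sigmoid(s):
    """Numerically stable logistic function."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)

rng = random.Random(42)          # fixed seed for repeatability
REAL_MEAN, REAL_STD = 4.0, 0.5   # hypothetical "real data" distribution

# Generator: x = a * z + b, with z drawn from standard normal noise
a, b = 1.0, 0.0
# Discriminator: D(x) = sigmoid(w * x + c), the probability that x is real
w, c = 0.0, 0.0

lr = 0.01
steps = 500
history = []

for step in range(steps):
    # Discriminator turn: one real sample (label 1) and one fake (label 0)
    x_real = rng.gauss(REAL_MEAN, REAL_STD)
    x_fake = a * rng.gauss(0.0, 1.0) + b
    for x, label in ((x_real, 1.0), (x_fake, 0.0)):
        error = sigmoid(w * x + c) - label   # gradient of the loss w.r.t. the logit
        w -= lr * error * x
        c -= lr * error

    # Generator turn: try to make the discriminator answer "real" (label 1)
    z = rng.gauss(0.0, 1.0)
    x_fake = a * z + b
    error = sigmoid(w * x_fake + c) - 1.0    # gradient of the loss w.r.t. the logit
    grad_x = error * w                       # chain rule back through D's input
    a -= lr * grad_x * z
    b -= lr * grad_x

    history.append((a, b, w, c))

print(f"generator offset b after training: {b:.3f} (real mean is {REAL_MEAN})")
```

Only the discriminator's parameters change during its turn, and only the generator's during its own, which mirrors the alternation in the steps above. A model this small may oscillate rather than settle, so treat the printed value as something to experiment with rather than a guaranteed result.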

In this example, a generator and a discriminator are pitted against one another in a competitive training process to demonstrate how GANs function. The discriminator learns to distinguish between genuine and created pictures, while the generator learns to create realistic ones. Together, they provide a practical framework for producing artificial data that closely mimics actual data, presenting fascinating opportunities for generative modelling.

Conclusion

Generative Adversarial Networks (GANs) have revolutionized the field of generative modelling by offering a solid foundation for producing high-quality, realistic synthetic data. I hope this blog has improved your understanding of GANs and how they work. The next time you hear someone mention Drag Your GAN, you can feel a little more confident that you already know the idea behind it, just as I do! Stay tuned for more informative tutorials on such topics.